Snowplow Fractribution dbt package

The package source code can be found in the snowplow/dbt-snowplow-fractribution repo, and the docs for the macro design are here. If you would like to follow the Fractribution accelerator, which includes sample data, see here

The Fractribution Package is no longer maintained, please refer to the Attribution package for marketing attribution analysis with Snowplow

The Fractribution package is dependent on the snowplow_web_page_views model created by the snowplow_web dbt package. Run snowplow_web if you do not have data in the snowplow_web_page_views table for the period of time you will run fractribution for.

Overview

The Snowplow Fractribution dbt package is a tool to perform Attribution Modeling. The name itself comes from Fractional attribution, which allows you to attribute the value of a conversion to one or more channels depending on the conversion pathway. As a result, it becomes possible to determine the revenue per channel, as well as ROAS (Return On Ad Spend - the amount of revenue that is earned for every dollar spent on advertising) once you have cost data for each marketing channel.

While the throughout this package we use the term channel, it is entirely possible to define this in terms of something else e.g. a campaign, and define the spend and classification in the channel_spend and channel_classification macros respectively.

This package consists of a series of dbt models that produce the following tables:

snowplow_fractribution_channel_counts: Number of sessions grouped by channel, campaign, source and mediumsnowplow_fractribution_channel_spend: Spend on each channel, used in ROAS calculationssnowplow_fractribution_conversions_by_customer_id: Conversion revenue for each conversion, along with the associatedcustomeridsnowplow_fractribution_path_summary: Summary of different path combinations and associated conversion/non-conversionssnowplow_fractribution_paths_to_conversion: Path combinations leading to conversionsnowplow_fractribution_paths_to_non_conversion: Path combinations leading to non-conversionsnowplow_fractribution_sessions_by_customer_id: Channel information by session timestamp, where an event timestamp is considered as the session start

Once the models are generated, the next step is to run a python script which is included in the package to run the attribution calculations. You can run this locally, via a docker image, or using Snowpark if you are on Snowflake. In the Quick Start section you will find a step-by-step guide on how to operate the package as a whole.

This script will generate and populate the following three additional tables:

snowplow_fractribution_channel_attribution: The main output table that shows conversions, revenue, spend and ROAS per channelsnowplow_fractribution_path_summary_with_channels: The conversion and revenue attribution per channel (used to create the report table)snowplow_fractribution_report_table: An intermediate table that shows, for each unique path, a summary of conversions, non conversions and revenue, as well as which channels were assigned a contribution

Intra-session Channels

In Google Analytics (Universal Analytics) a new session is started if a campaign source changes (referrer of campaign tagged URL). Snowplow utilizes activity based sessionization, rather than campaign based sessionization. Setting consider_intrasession_channels to false will take only the campaign information from the first page view in a given Snowplow session, and not give credit to other channels in the converting session if they occur after the initial page view.

Filter unwanted / wanted channels

You can specify a list of channels for the variable snowplow__channels_to_exclude to exclude them from analysis (if kept empty all channels are kept). For example, users may want to exclude the 'Direct' channel from the analysis.

You can also do the opposite, filter on certain channels to include in your analysis. You can do so by specifying them in the list captured within the variable snowplow__channels_to_include.

Path Transform Options

Paths to conversion are often similar, but not identical. As such, path transforms reduce unnecessary complexity in similar paths before running the attribution algorithm.

exposure (default): the same events in succession are reduced to one:A → A → BbecomesA → B, a compromise between first and uniqueunique: all events in a path are treated as unique (no reduction of complexity). Best for smaller datasets (small lookback window) without a lot of retargetingfirst: keep only the first occurrence of any event:A → B → AbecomesA → B, best for brand awareness marketingfrequency: keep a count of the events’ frequency:A → A → BbecomesA(2) → B, best when there is a lot of retargetingremove_if_last_and_not_all: requires a channel to be added as a parameter, which gets removed from the latest paths unless it removes the whole path as it is trying to reach a non-matching channel parameter: E.g target element:Apath:A → B → A → AbecomesA → Bremove_if_not_all: requires a channel to be added as a parameter, which gets removed from the path altogether unless it would result in the whole path's removal: E.g target element:Apath:A → B → A → AbecomesB

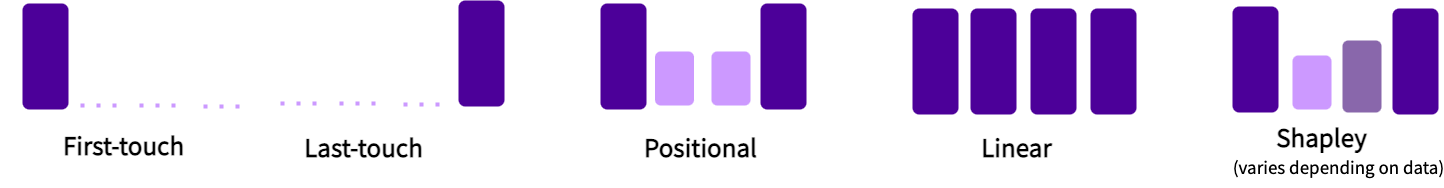

Attribution Models

The package currently offers 5 different attribution models, that can be chosen by setting the attribution_model flag when calling the python script.

shapley(default): Takes the weighted average of the marginal contributions of each channel to a conversionfirst_touch: Assigns 100% attribution to the first channel in each path.last_touch: Assigns 100% attribution to the last channel in each path.position_based: The first and last channels get 40% of the credit each, with the remaining channels getting the leftover 20% distributed evenly.linear: Assigns attribution evenly between all channels on the path.

Package Macros

To overwrite these macros correctly with those in your project, ensure you prefix the macro name by default__ in the definition e.g.

{% macro default__conversion_value() %}

tr_total/100

{% endmacro %}

conversion_clause macro

The

conversion_clausemacro specifies how to filter Snowplow events to only succesfful conversion events. How this is filtered will depend on your definition of a conversion. The default is filtering to events wheretr_total > 0, but this could instead filter onevent_name = 'checkout', for example. If you are using the e-commerce model, you will still need to set this for the fractribution code to run (even though all events are conversions in the e-commerce model), in this case change it totransaction_revenue > 0.

conversion_value macro

The

conversion_valuemacro specifies either a single column or a calculated value that represents the value associated with that conversion. The default istr_total, but revenue or a calculation using revenue and discount_amount from the default e-commerce schema, for example, could similarly be used.

channel_classification macro

The

channel_classificationmacro is used to perform channel classifications. This can be altered to generate your expected channels if they differ from the channels generated in the default macro. It is highly recommended that you examine and configure this macro when using your own data, as the ROAS calculations and attribution calculations will run against these channel definitions, and the default values will not consider any custom marketing parameters.

channel_spend macro

The

channel_spendmacro is used to query the spend by channels. It requires a user supplied SQL script to extract the total ad spend by channel.Required output format:

channel: STRING NOT NULLspend: FLOAT64 (or other warehouse equivalent) (Use the same monetary units as conversion revenue, and NULL if unknown.)

Environment Variables

For the script or docker container to run you need to set some environment variables that can be accessed to connect to your warehouse. These variables vary by warehouse. If you are using Docker, it can be easier to set these in a file that you reference when you run the container. See the Quick Start for detailed running information.

- BigQuery

- Databricks

- Snowflake

- Redshift

project_id: Project id of your BigQuery warehousebigquery_dataset: Dataset for your derived tables For the python script only (for Docker you mount this as a volume at run time):google_application_credentials: Google Service Account JSON file

databricks_schema: Schema for your derived tablesdatabricks_server_hostname: Databricks server hostnamedatabricks_http_path: Databricks compute resources URLdatabricks_token: Personal Access Token

snowflake_account: Snowflake account IDsnowflake_user: Snowflake usernamesnowflake_password: Snowflake passwordsnowflake_user_role: Snowflake rolesnowflake_warehouse: Snowflake warehousesnowflake_database: Snowflake databasesnowflake_schema: Schema for your derived tables

redshift_host: Redshift host urlredshift_database: Redshift databaseredshift_port: Redshift port (likely5439)redshift_user: Redshift userredshift_password: Redshift passwordredshift_schema: Redshift schema

All warehouses require the following if you are using the docker container:

conversion_window_start_date: The start date of your conversion window, in the format of YYYY-MM-DDconversion_window_end_date: The end date of your conversion window, in the format of YYYY-MM-DDattribution_model: The attribution model methodwarehouse: The warehouse to run on, one ofsnowflake,bigquery,redshift, ordatabricks

Differences to Google's Fractribution

There are some changes from Google's Fractribution code that have been noted below.

- Temporary UDFs have been converted to persistent / permanent UDFs

- Some temporary tables converted to permanent tables

- Users without a user_id are treated as 'anonymous' ('f') users and the domain_userid is used to identify these sessions

- Users with a user_id are treated as identified ('u') users

- Templating is now run almost entirely within dbt rather than the custom SQL / Jinja templating in the original Fractribution project

- Channel changes and contributions within a session can be considered using the

consider_intrasession_channelsvariable