Conclusions and next steps from the Kafka live viewer profiles accelerator

In this tutorial, you've explored the live viewer profiles solution accelerator for video streaming, gaining practical insights into building, deploying, and extending real-time, event-driven architectures using Snowplow and Kafka.

You built a real-time system for processing event data, including:

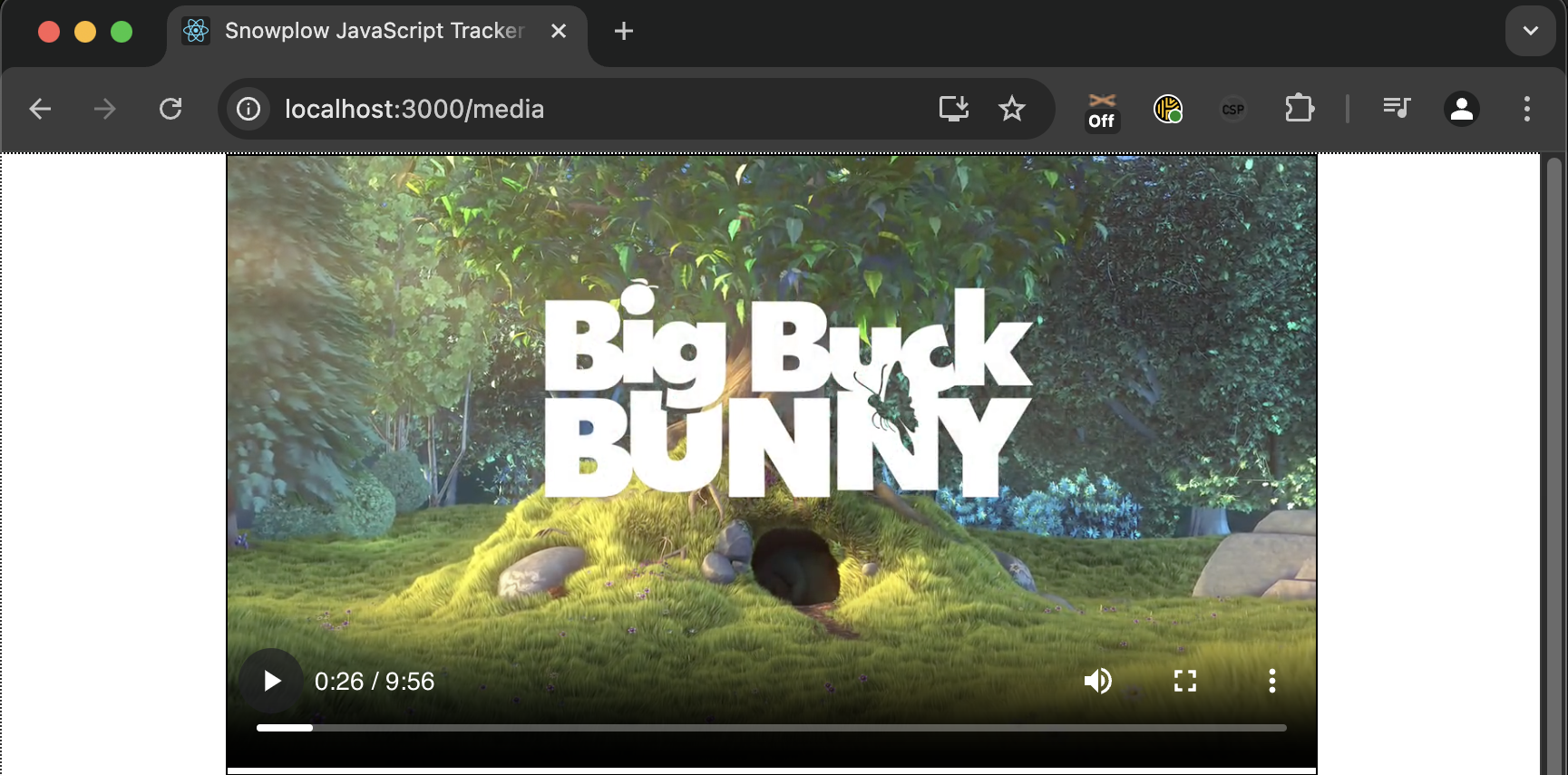

- Web tracking application for collecting media events

- Snowplow Collector and Snowbridge for event processing and forwarding

- Live Viewer back-end for managing real-time data with Kafka and DynamoDB

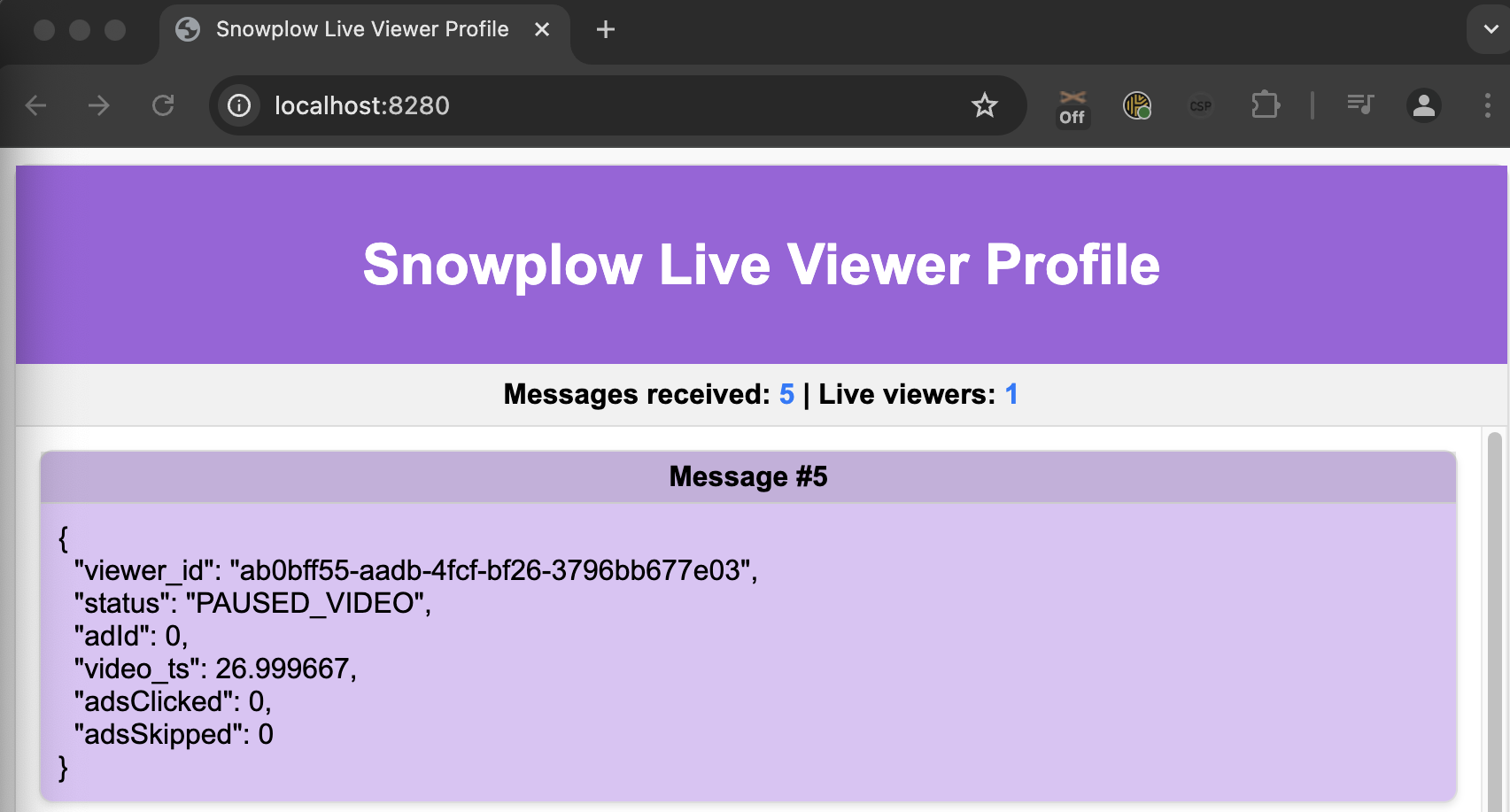

- Live Viewer front-end for visualizing real-time user activity on the web tracking application
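To make the data flow through these components more concrete, here is a minimal Python sketch of the kind of logic a back-end like this performs: it folds a stream of media events into per-viewer state. It is an illustration under stated assumptions, not the accelerator's code. A Python list stands in for the Kafka topic and a dictionary stands in for the DynamoDB table. The `MediaEvent` fields, the simplified event names `play`, `pause` and `end`, and the 30-second activity timeout are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List

# Hypothetical, simplified event shape. Real Snowplow enriched events carry many
# more fields, and the accelerator's actual field names may differ.
@dataclass
class MediaEvent:
    user_id: str
    event_name: str  # simplified names such as "play", "pause", "end" (assumption)
    timestamp_ms: int

@dataclass
class ViewerState:
    status: str
    last_seen_ms: int

# Assumed mapping from a media event name to the viewer status it implies.
STATUS_BY_EVENT = {"play": "watching", "pause": "paused", "end": "finished"}

def apply_events(store: Dict[str, ViewerState],
                 events: Iterable[MediaEvent]) -> Dict[str, ViewerState]:
    """Fold events into per-viewer state, as a consumer might before writing to a state store."""
    for event in events:
        status = STATUS_BY_EVENT.get(event.event_name)
        if status is None:
            continue  # ignore events that do not change the viewer's status
        current = store.get(event.user_id)
        # Skip events older than the stored state so a late message cannot overwrite newer data.
        if current is not None and event.timestamp_ms < current.last_seen_ms:
            continue
        store[event.user_id] = ViewerState(status=status, last_seen_ms=event.timestamp_ms)
    return store

def active_viewers(store: Dict[str, ViewerState], now_ms: int,
                   timeout_ms: int = 30_000) -> List[str]:
    """Return viewers currently watching whose last event is within the (assumed) timeout."""
    return sorted(
        user_id for user_id, state in store.items()
        if state.status == "watching" and now_ms - state.last_seen_ms <= timeout_ms
    )

# Usage: the last event for u2 arrives out of order and is ignored.
events = [
    MediaEvent("u1", "play", 1_000),
    MediaEvent("u2", "play", 1_500),
    MediaEvent("u1", "pause", 5_000),
    MediaEvent("u2", "play", 1_200),
]
store = apply_events({}, events)
print(store["u1"].status)                     # paused
print(active_viewers(store, now_ms=10_000))   # ['u2']
```

Dropping events older than the stored timestamp is one simple way to keep late-arriving messages from overwriting newer state; the accelerator itself may handle ordering differently.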

This architecture shows how an event-driven system can deliver real-time insights in a video streaming context.

What you achieved

You explored how to:

- Use LocalStack to emulate AWS services for local development and testing

- Launch and interact with the system components, such as the Kafka UI and LocalStack UI

- View and verify the real-time event data from the browser using Snowplow's media tracking capabilities

- Deploy the solution in an AWS environment using Terraform

You can extend this solution to use Snowplow event data for other real-time use cases, such as:

- Web engagement analytics

- Personalized recommendations

- Ad performance tracking

Next steps

- Extend tracking: track more granular user interactions, or add tracking on a new platform such as mobile

- Extend dashboard: extend the Live Viewer to include information on the media being watched and the user

- Replace the state store: replace Amazon DynamoDB with an alternative such as Google Bigtable or MongoDB, so the solution is not tied to AWS
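If you take on the state store replacement, one way to keep the change contained is to have the back-end depend on a small interface rather than on DynamoDB directly. The Python sketch below assumes a hypothetical `StateStore` protocol with only `get` and `put`, plus a hypothetical `record_status` helper. Adapters for DynamoDB, Bigtable, or MongoDB would implement the same two methods using each database's own client library; the in-memory class here is only a stand-in for local tests.

```python
from typing import Dict, Optional, Protocol

class StateStore(Protocol):
    """Hypothetical minimal interface for the back-end's state store."""
    def get(self, key: str) -> Optional[dict]: ...
    def put(self, key: str, value: dict) -> None: ...

class InMemoryStateStore:
    """Non-durable stand-in implementation, useful for tests."""
    def __init__(self) -> None:
        self._items: Dict[str, dict] = {}

    def get(self, key: str) -> Optional[dict]:
        item = self._items.get(key)
        # Return a copy so callers cannot mutate stored state by accident.
        return dict(item) if item is not None else None

    def put(self, key: str, value: dict) -> None:
        self._items[key] = dict(value)

def record_status(store: StateStore, user_id: str, status: str) -> None:
    """Business logic depends only on the interface, not on a specific database."""
    store.put(user_id, {"status": status})

store = InMemoryStateStore()
record_status(store, "u1", "watching")
print(store.get("u1"))  # {'status': 'watching'}
```

Because `Protocol` uses structural typing, an adapter does not need to inherit from `StateStore`: any class with matching `get` and `put` methods satisfies a type checker.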

By completing this tutorial, you're equipped to use event-driven systems and Snowplow's analytics framework to build real-time solutions tailored to your streaming and analytics needs.